Loading Data from

Object Storage, Azure Blob Storage and AWS S3 to Oracle Database

Part 2

Author: Donatien MBADI OUM, Oracle Consultant

1.

Introduction

A multicloud environment is one where

an enterprise uses more than one cloud platform (with at least two or more

public clouds) that each delivers a specific application or service. A

multicloud combines on-premise operations with services and applications

running on multiple public cloud providers, which enables organizations to

capture the benefits of each platform while mitigating their downsides.

While using resources from multiple

cloud vendors, you usually deal with migrating data across the environments or

keeping it on one cloud environment while accessing it from another. The Oracle

DBMS_CLOUD is cloud native package which allows you to access data on OCI

Object Storage, Azure Blob Storage and Amazon S3 buckets and import it into an

Oracle Database from these sources. DBMS_CLOUD package is pre-installed,

configured and maintained in Oracle Autonomous database.

In this article, we are going first to

describe step-by-step procedure on accessing and importing data from Oracle

Object Storage, Azure Blob Storage and Amazon S3 buckets.

2.

Loading Data from Oracle Object Storage

Your user or role is now properly

configured and enabled to using DBMS_CLOUD. Now, you will learn on how to

accessing data in Object Storage that is not public.

We are going to describe this

procedure in the following steps

1-

Create an Object Storage Bucket in the Oracle Cloud

Infrastructure (OCI) and upload sample datasets into the bucket

2-

Create a credential to access to the Object Storage Bucket

3-

Create an External Table that maps datasets

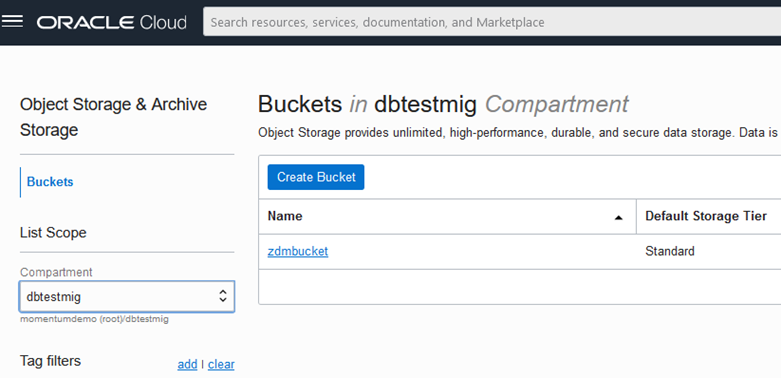

2.1.Creating and Object Storage Bucket

To create an Object Storage Bucket,

Navigate on Storage Menu

-

Click on Buckets

-

Click on Create Bucket and enter the Bucket Name

-

Click on Create to create a standard bucket.

-

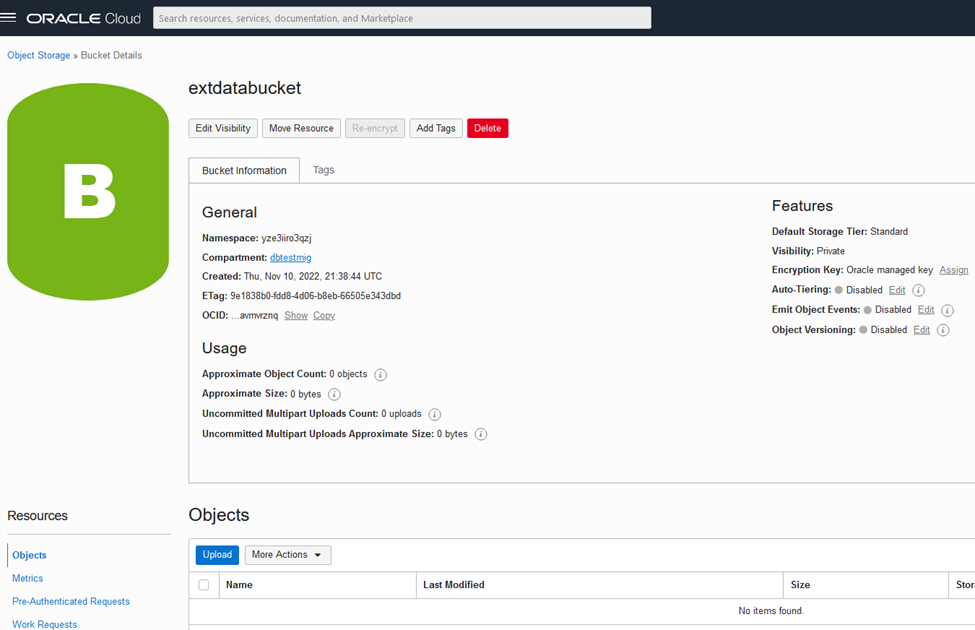

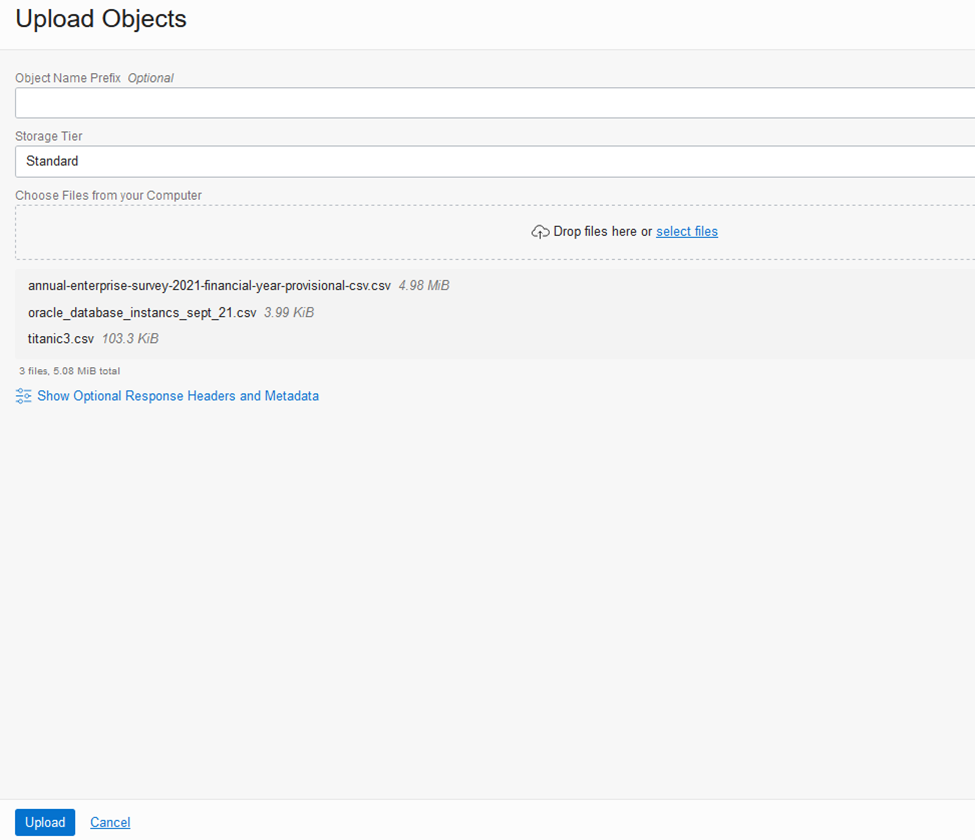

Upload some files in the Bucket

-

Click on Upload

-

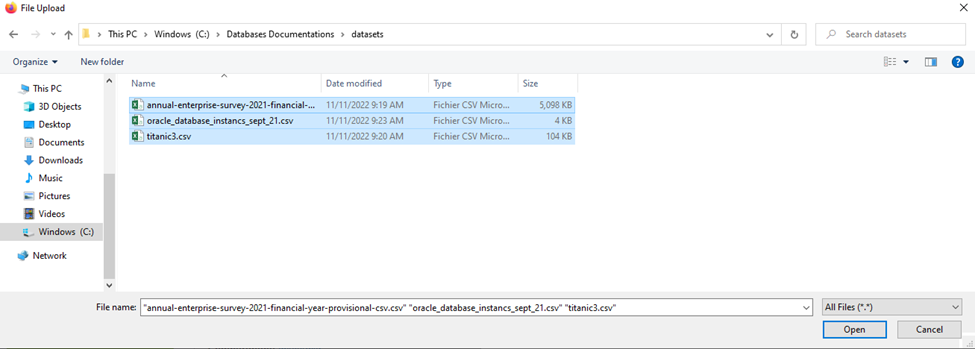

Click on Select files

-

Select your files and Click Open

-

Click Upload

-

Click on Close

-

You can view the object details by clicking on the three

buttons in the right of each object.

-

Click on View Object Details

2.2.Creating a credential to access to the

object storage

Your need first to authenticate with

OCI user in the tenancy that has appropriate privileges to the object storage

bucket in the region in question.

You need to create either an OCI API

signing key or an auth token for a user in your tenancy.

-

Log to you tenancy and under the user profile, click on User

settings.

-

Under Resources, Click on Auth Tokens

-

Click on Generate Token an enter the description

-

Click on Generate Token

-

Click on Copy

Once you have copied your token, you

are ready to create a Credential using the following script:

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'your credential name',

username => 'OCI within your tenancy',

password => 'auth token generated for OCI user'

);

END;

/

Where:

-

credential_name is the name you need to give to your credential. Ex. ORCLCRED

-

username is

your cloud account name. Ex. myemailadress@email.com

-

password is

the string that you copied when creating the token

Log to your database schema (or your

pluggable database) and run the script.

-

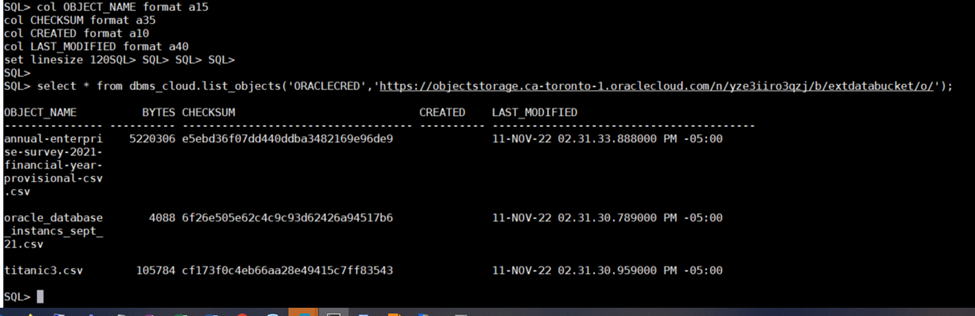

You can run the following SQL statement to list all the

credentials created on you schema

SQL>

select *

from USER_CREDENTIALS ;

-

You can also run the following SQL statement to list all the

objects from the storage object

select * from

dbms_cloud.list_objects(<'CredentialName'>,'https://objectstorage.<region>.oraclecloud.com/n/<ObjectStorageNameSpace>/b/<BucketName>/o/');

You can have the URL details on the

object details

3.

Loading Data from Azure Blob Storage

For testing purposes network

connectivity can be established over Internet, for the production environments,

the Architect must set up a private dedicated connection between Azure and OCI

via the Azure and OCI Interconnect or 3rd party network provider.

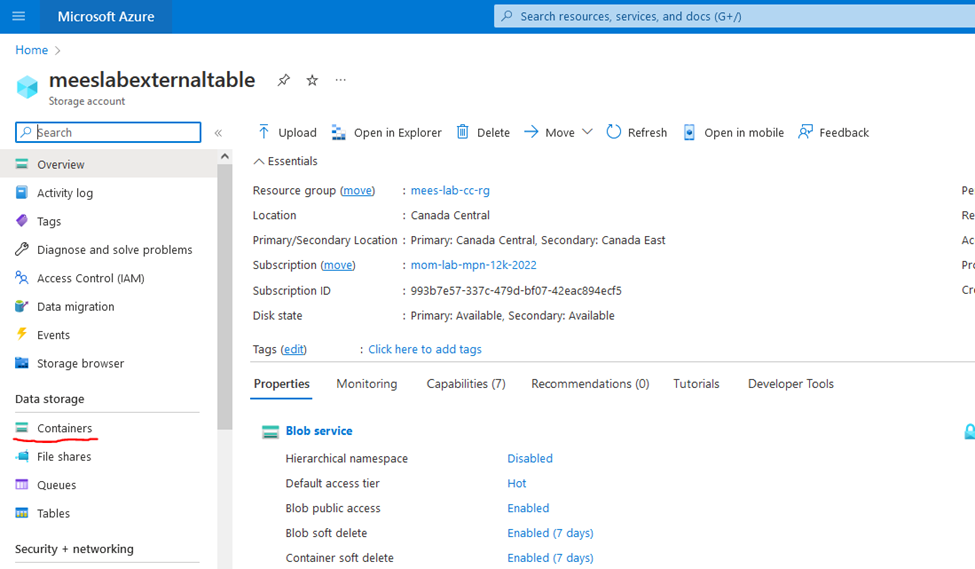

3.1.Create a container on Azure

To create a Container, Log on Azure

with your Azure account and Click on your storage account. Click on Containers

-

Click Container to create a new container

-

Enter the name of container

-

Click on Create

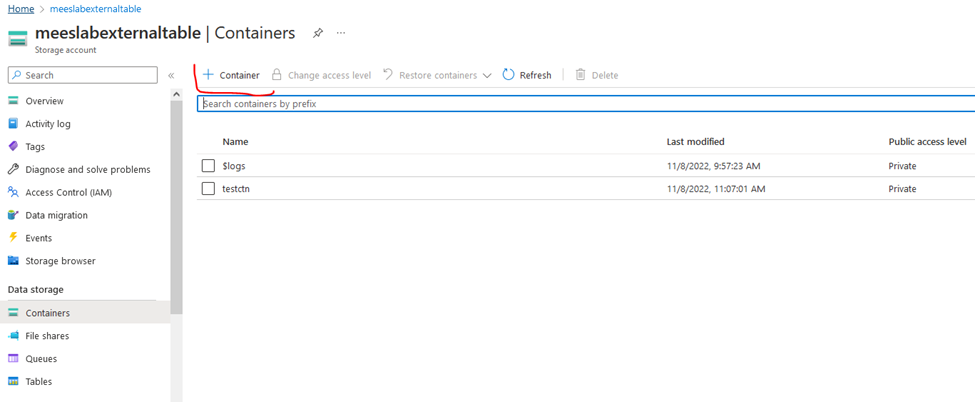

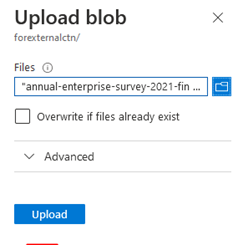

3.2.Upload files on Azure

-

Click on a container you just create

-

Click on Upload

-

Select the files you need to upload and click on Open

-

Click on Upload

3.3.Copy the Access Key of your storage

account

-

From your account page, Click on Access Key in the

Security+Networking section.

-

Click on Show (text will changed to Hide)

-

Copy the key

3.4.Creating the credentials in Oracle

Database

Once you have copied the key, you are

ready to create a Credential using the following script:

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'your credential name',

username => 'Storage Account Name',

password => 'Key'

);

END;

/

Where:

-

credential_name is the name you need to give to your credential. Ex. AZURECRED

-

username is

the name of your storage account

-

Key is

the copied key

-

You can run the following SQL statement to list all the

credentials created on you schema

SQL>select * from

USER_CREDENTIALS;

-

You can also run the following SQL statement to list all the

objects from the Azure Blob storaget

select * from

dbms_cloud.list_objects(<'CredentialName'>,URL of the object');

From the container account, choose

Containers and click on the container name where your account reside. Click on

the target object name and copy the Object URL

4.

Loading Data from Amazon S3

For testing purposes network

connectivity can be established over Internet, for the production environments,

the Architect must set up a private dedicated connection between AWS and OCI

via the Azure and OCI Interconnect or 3rd party network provider.

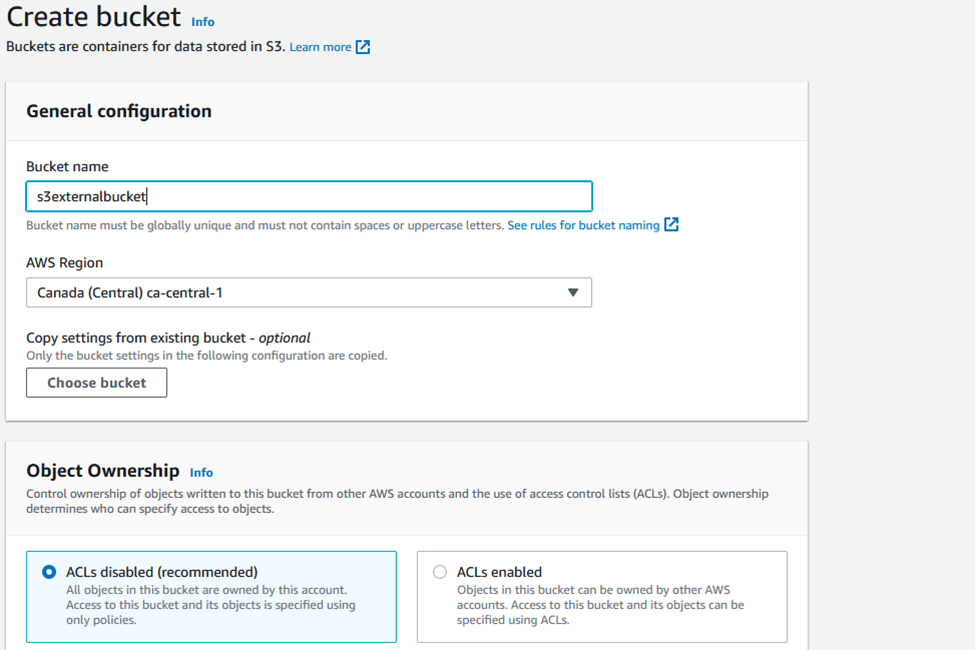

4.1.Create a S3 bucket

To create a S3 bucket, Log on AWS with

your account and Click on S3 service

-

Click on Create bucket

-

Enter the S3 bucket name

-

Click on Create bucket

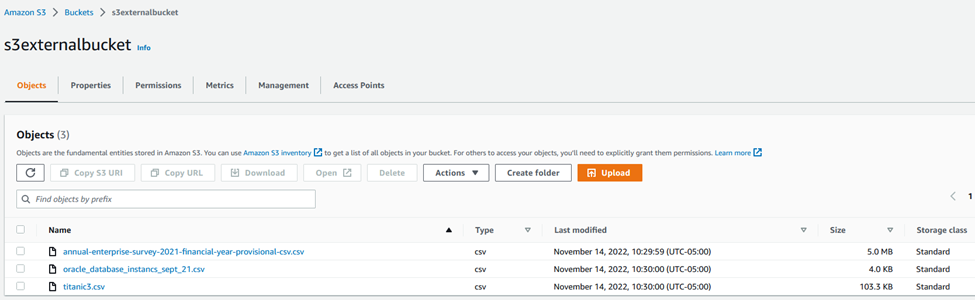

4.2.Upload files to S3 bucket

-

Click on a bucket name you just created

-

Click on Upload

-

Click on Add files

-

Select the files you need to upload and click on Open

-

Click on Upload

-

Click on Close

4.3.Grant AWS IAM user access privileges

to S3 bucket

-

Go to IAM (Identity and Access Management) service.

-

Choose Users in the Access Managament section and Click on a

group assigned to your user.

-

Choose Permissions tab and add a policy that allows access to

the S3 bucket- where your data is stored. For example

4.4.Create access key for AWS IAM user

-

From IAM page, choose users and click on your user name.

-

Choose Security credentials tab

-

Click on Create access key

-

You will prompt to download a .csv file that contains your

Access key and Secret access key. For example:

|

Access key ID |

Secret access key |

||

|

AKIA3FPEZEFPUHIV76H6 |

xXwUh6thduDxE58jWDzW0+c1qKGdtmluFqHqj7nT |

||

|

|

|

||

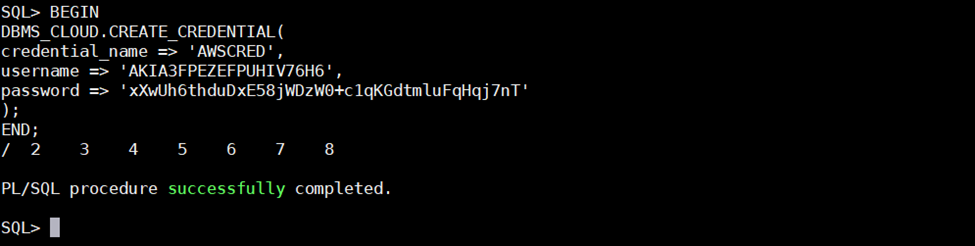

4.5.Creating the credentials in Oracle

Database

Once you have the access key and the

secret access key, you are ready to create a Credential using the following

script:

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'your credential name',

username => '<Access_Key_ID>',

password => '<Secret_Access_Key>'

);

END;

/

Where:

-

credential_name is the name you need to give to your credential. Ex. AZURECRED

-

Access_Key_ID is the access key

-

Secret_Access_Key is the secret access key

-

You can run the following SQL statement to list all the

credentials created on you schema

SQL>select * from

USER_CREDENTIALS;

-

You can also run the following SQL statement to list all the

objects from the AWS S3 bucket

select * from

dbms_cloud.list_objects(<'CredentialName'>,'URL of the object');

Get the URL of the object stored in a

S3 bucket that you want to import into your Oracle Database. From S3 page,

choose bucket

-

Click on the bucket name

-

Click on any object

-

Copy the link

Example :

https://s3externalbucket.s3.ca-central-1.amazonaws.com/titanic3.csv

-

Run the following script

SQL>select * from dbms_cloud.list_objects('AWSCRED','https://s3externalbucket.s3.ca-central-1.amazonaws.com');

5.

Access Data using an External table from Oracle Object Storage

For some case, we can need to keep

files on Objet Storage or Azure Blob Storage etc. and need accessing the data

through an Oracle Database. You can then use an external table pointing to the

files using CREATE_EXTERNAL_TABLE procedure of the DBMS_CLOUD package.

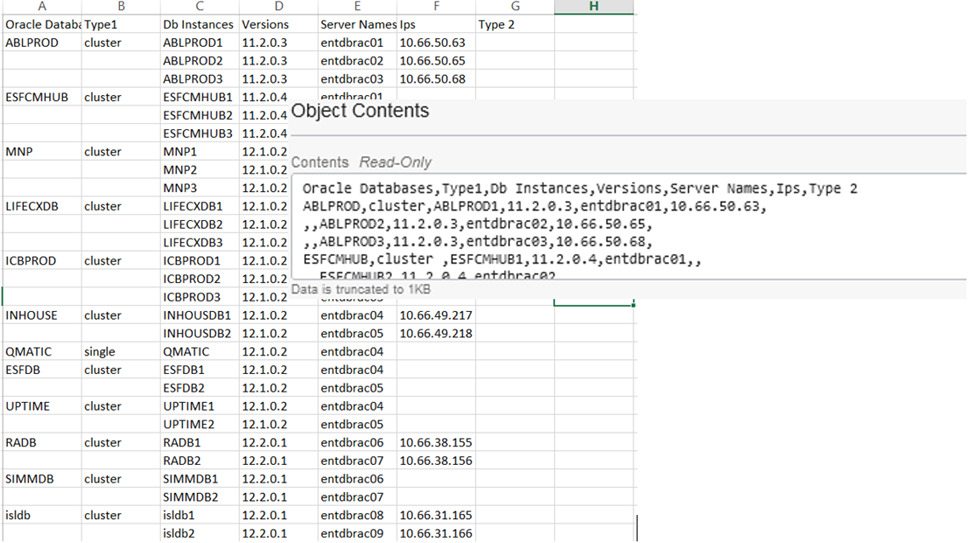

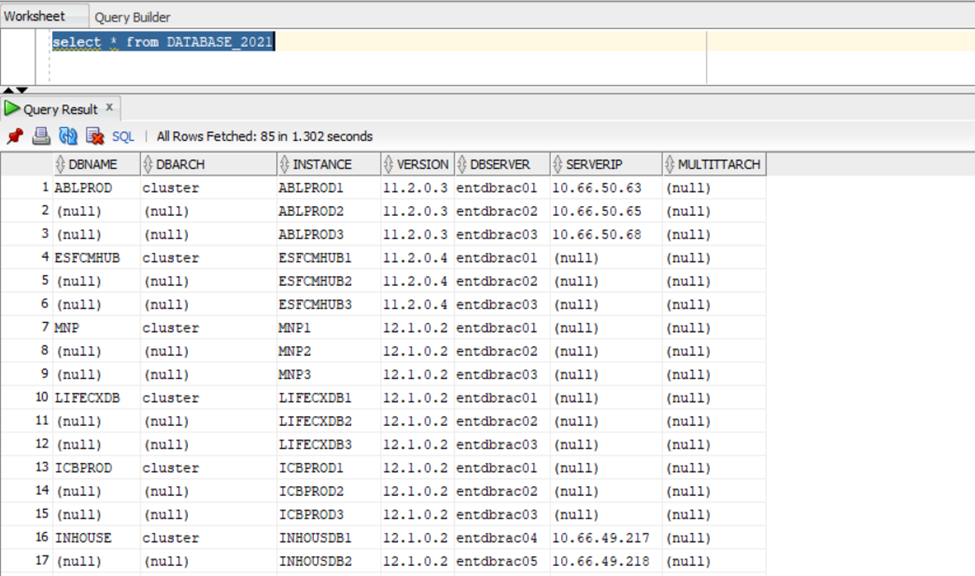

For example, let’s the file oracle_database_instancs_sept_21.csv stored on Object Storage.

Creating the external tables is very

straightforward. You specify the format being loaded using the json_object

function. The supported format are csv, orc, avro and parquet.

The following script create an

external table that we want:

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => 'DATABASE_2021',

credential_name => 'ORACLECRED',

file_uri_list => 'https://objectstorage.ca-toronto-1.oraclecloud.com/n/yze3iiro3qzj/b/extdatabucket/o/oracle_database_instancs_sept_21.csv',

format => json_object('type' value 'csv', 'skipheaders' value '1'),

field_list => 'DBNAME,

DBARCH,

INSTANCE,

VERSION,

DBSERVER,

SERVERIP,

MULTITTARCH',

column_list => 'DBNAME VARCHAR2(20),

DBARCH VARCHAR2(20),

INSTANCE VARCHAR2(20),

VERSION VARCHAR2(10),

DBSERVER VARCHAR2(20),

SERVERIP VARCHAR2(20),

MULTITTARCH VARCHAR2(15)');

END;

/

You can verify that the external table

contents are mapping as expected with the following procedure:

BEGIN

DBMS_CLOUD.VALIDATE_EXTERNAL_TABLE(

table_name =>

'DATABASE_2021',

schema_name =>

'HR'

);

END;

/

The output of this statement is placed

into two tables called VALIDATE$x_LOG and VALIDATE$x_BAD.

Example:

SQL> select * from

VALIDATE$6_LOG;

RECORD

----------------------------------------------------------------------------

LOG file opened at 11/14/22 17:57:23

Total Number of Files=1

Data File: https://objectstorage.ca-toronto-1.oraclecloud.com/n/yze3iiro3qzj/b/e

xtdatabucket/o/oracle_database_instancs_sept_21.csv

Log File:

VALIDATE$6_23414.log

RECORD

-----------------------------------------------------------------------------

LOG file opened at 11/14/22 17:57:23

Total Number of Files=1

Data File:

https://objectstorage.ca-toronto-1.oraclecloud.com/n/yze3iiro3qzj/b/e

xtdatabucket/o/oracle_database_instancs_sept_21.csv

RECORD

-----------------------------------------------------------------------------

Log File:

VALIDATE$6_23414.log

LOG file opened at 11/14/22 17:57:23

Bad File:

VALIDATE$6_23414.bad

Field Definitions for

table DATABASE_2021

Record format DELIMITED BY

Data in file has same endianness as the

platform

RECORD

--------------------------------------------------------------------------------

Rows with all null fields are accepted

Fields in Data Source:

DBNAME CHAR (255)

Terminated by ","

Enclosed by """ and

"""

DBARCH CHAR (255)

Terminated by ","

Enclosed by """ and

"""

INSTANCE CHAR (255)

RECORD

--------------------------------------------------------------------------------

Terminated by ","

Enclosed by """ and

"""

VERSION CHAR (255)

Terminated by ","

Enclosed by """ and

"""

DBSERVER CHAR (255)

Terminated by ","

Enclosed by """ and

"""

SERVERIP CHAR (255)

Terminated by ","

Enclosed by """ and

"""

RECORD

--------------------------------------------------------------------------------

MULTITTARCH CHAR (255)

Terminated by ","

Enclosed by """ and

"""

54 rows selected.

SQL> select * from

VALIDATE$6_BAD;

no rows selected

SQL>

Then,

you can use a SQL queries to return data from your external table.

You

can also return the data definition language for the created table:

SQL>SELECT * FROM DBMS_METADATA.GET_DDL('TABLE','DATABASE_2021')

CREATE TABLE

""HR"".""DATABASE_2021""

( ""DBNAME"" VARCHAR2(20),

""DBARCH""

VARCHAR2(20),

""INSTANCE""

VARCHAR2(20),

""VERSION""

VARCHAR2(10),

""DBSERVER""

VARCHAR2(20),

""SERVERIP""

VARCHAR2(20),

""MULTITTARCH""

VARCHAR2(15)

)

ORGANIZATION

EXTERNAL

( TYPE

ORACLE_LOADER

DEFAULT

DIRECTORY ""DATA_PUMP_DIR""

ACCESS

PARAMETERS

( RECORDS

IGNORE_HEADER=1 DELIMITED BY DETECTED NEWLINE NOLOGFILE NOBADFILE NODISCARDFILE

READSIZE=10000000 CREDENTIAL 'ORACLECRED'

FIELDS CSV

WITHOUT EMBEDDED NOTRIM ( DBNAME,

DBARCH,

INSTANCE,

VERSION,

DBSERVER,

SERVERIP,

MULTITTARCH )

)

LOCATION

(

'https://objectstorage.ca-toronto-1.oraclecloud.com/n/yze3iiro3qzj/b/extdatabucket/o/oracle_database_instancs_sept_21.csv'

)

)

REJECT LIMIT

0

PARALLEL

"

6.

Access Data using an External table from Azure Blob Storage

For

this example, we will create and external table that mapped to file stored in

Azure Blob Storage. Those files contain data concerning restaurant inspection

from 2010 to 2015.

The

first file named dc_restaurant.csv and is as follow:

The

following script create a dc_restaurant

external table on related Oracle Database:

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => 'DC_RESTAURANT',

credential_name => 'AZURECRED',

file_uri_list => 'https://meeslabexternaltable.blob.core.windows.net/restaurants-inspection/DC_Restaurants.csv',

format => json_object('type' value 'csv',

'skipheaders' value '1','recorddelimiter' value ''),

field_list => 'PERMIT_ID,

NAME,

ADDRESS,

LATTITUDE,

LONGITUDE',

column_list => 'PERMIT_ID NUMBER,

NAME

VARCHAR2(50),

ADDRESS

VARCHAR2(60),

LATTITUDE VARCHAR2(20),

LONGITUDE VARCHAR2(20)');

END;

/

The

following script allow to verify that the external table contents are mapping

as expected.

BEGIN

DBMS_CLOUD.VALIDATE_EXTERNAL_TABLE(

table_name

=> 'DC_RESTAURANT',

schema_name => 'HR'

);

END;

/