MongoDB

High Availability Cluster

Part

2

Replication and Sharding Implementation

By

Donatien MBADI OUM, Database Expert

1. Introduction

In part 1 we presented an Overview of MongoDB High

Availability. The goal of this second part is to be practice by implementing

replication and sharding. On this case, all the MongoDB instances are hosted on

a Linux machine. In real case, separated servers are used with different Ips

addresses.

We will start by setting up our replication by using the following steps:

-

Creating appropriated folders for each MongoDB instance

-

Starting the mongod instances

-

Enabling replication in MongoDB

-

Adding MongoDB instances to replica set

-

Testing the replication process

-

Testing the failover

-

Removing MongoDB instance from replica set

After that we will implement our sharding by following the below steps:

-

Creating

Config Servers for MongoDB

-

Creating

Shard Servers for MongoDB

-

Starting

the Servers to initiate MongoDB Replication

-

Adding

Shards to MongoDB Shard Servers

-

Testing

the Sharding process

2. Configuring

MongoDB Replication

2.1.

Environment

On this tutorial, we are using:

- Oracle VirtualBox 6.1

- Enterprise Linux 8.4

- MongoDB 5.0

2.2.

Architecture

On this tutorial, we create 5 instances

for replication as below:

- One master instance

- Three secondaries’ instances

- An arbiter

2.3.

Creating instance folders

Since we are using a same machine (In

real case, we should have different servers), we create 5 directories, one for

each instance:

/opt/data/repl01/inst01

/opt/data/repl01/inst02

/opt/data/repl01/inst03

/opt/data/repl01/inst04

/opt/data/repl01/arbiter

2.4.

Starting MongoDB instance

We choose repl01 as a name of our

replicat set. To start the mongod instance, we specify the port value for the

Mongo instance along with the path to our MongoDB installation. For each

instance, we will add shardsrv option to prepare a future sharding

configuration in a partitioned cluster.

To start the instances, we have can

either directly running the mongod with all the options you need or by

configuring a configuration file.

We choose the following command to

enable the first mongod instance:

mongod --replSet repl01 --journal --port

27101 --shardsvr --dbpath /opt/data/repl01/inst01 --logpath /opt/data/repl01/inst01/mongod_27101.log

--fork

To use the configuration file, we need

to first copy the existing sample file /etc/mongod.conf.

Eg. For instance inst01 (shard server),

cp /etc/mongod.conf /etc/confs/repl01/inst01/mongod_inst01.conf

#vim /etc/confs/repl01/inst01/mongod_inst01.conf

#

mongod.conf

# for

documentation of all options, see:

#

http://docs.mongodb.org/manual/reference/configuration-options/

# where

to write logging data.

systemLog:

destination: file

logAppend: true

path:

/opt/data/repl01/inst01/mongod_27101.log

# Where

and how to store data.

storage:

dbPath: /opt/data/repl01/inst01

journal:

enabled: true

# engine:

# wiredTiger:

# how

the process runs

processManagement:

fork: true

# fork and run in background

pidFilePath: /var/run/mongodb/mongod.pid # location of pidfile

timeZoneInfo: /usr/share/zoneinfo

#

network interfaces

net:

port: 27101

bindIp: 127.0.0.1 # Enter 0.0.0.0,:: to bind to all IPv4 and

IPv6 addresses or, alternatively, use the net.bindIpAll setting.

#security:

#operationProfiling:

replication:

replSetName: "repl01"

sharding:

clusterRole: shardsvr

##

Enterprise-Only Options

#auditLog:

#snmp:

We also create the other instances

including the arbiter:

[root@linuxsvr01]# mongod --replSet repl01 --journal --port 27102

--shardsvr --dbpath /opt/data/repl01/inst02 --logpath /opt/data/repl01/inst01/mongod_27102.log

--fork

about to fork child process, waiting until server is ready for

connections.

forked process: 9704

child process started successfully, parent exiting

[root@linuxsvr01]# mongod --replSet repl01 --journal --port 27103

--shardsvr --dbpath /opt/data/repl01/inst03 --logpath /opt/data/repl01/inst03/mongod_27103.log

--fork

about to fork child process, waiting until server is ready for

connections.

forked process: 9760

child process started successfully, parent exiting

[root@linuxsvr01]#

[root@linuxsvr01 inst01]# mongod --replSet repl01 --journal --port 27104

--shardsvr --dbpath /opt/data/repl01/inst04 --logpath /opt/data/repl01/inst04/mongod_27104.log

--fork

about to fork child process, waiting until server is ready for

connections.

forked process: 9817

child process started successfully, parent exiting

[root@linuxsvr01]# mongod --replSet repl01 --journal --port 27100

--shardsvr --dbpath /opt/data/repl01/arbiter --logpath /opt/data/repl01/inst01/mongod_27100.log

--fork

about to fork child process, waiting until server is ready for

connections.

forked process: 9871

child process started successfully, parent exiting

[root@linuxsvr01]#

Or by using a configuration file for

each instance:

2.5.

Enabling replication

On your production environment, you must

make Ips/Host name configurations on /etc/host files on each server.

Now, we need to open the Mongo Shell

with our primary instance as follows:

[root@linuxsvr01]# mongosh --port 27101

Note: We can still use mongo --port in MongoDB 5.0, but the new shell is mongosh

--port is deprecated.

After connected on the first instance,

we need to initiate the replication as follow:

test>

use admin

switched

to db admin

admin>

admin>

cfg={_id:"repl01",members:[{_id:0,host:"127.0.0.1:27101"},{_id:1,host:"127.0.0.1:27102"},{_id:2,host:"127.0.0.1:27100",arbiterOnly:true}]}

{

_id: 'repl01',

members: [

{ _id: 0, host: '127.0.0.1:27101' },

{ _id: 1, host: '127.0.0.1:27102' },

{

_id: 2, host: '127.0.0.1:27100', arbiterOnly: true }

]

}

admin>

admin>

rs.initiate(cfg);

{ ok: 1

}

repl01

[direct: other] admin>

repl01

[direct: secondary] admin>

2.6.

Adding nodes on replica set

Once we have initialized our Replica

Set, we can now add the various MongoDB instances using the add command as

follows:

>rs.add('127.0.0.1:27103')

The output {‘ok’:1} indicates that a MongoDB instance has

been successfully added to the Replica Set.

Note: We can also add an arbiter as

follow:

repl01:PRIMARY> rs.addArb('127.0.0.1:27100')

To check the status of the replication,

you can use the status command as follows:

>rs.status

repl01

[direct: primary] admin> rs.status()

{

set: 'repl01',

date:

ISODate("2021-09-23T22:33:51.584Z"),

myState: 1,

term: Long("1"),

syncSourceHost: '',

syncSourceId: -1,

heartbeatIntervalMillis:

Long("2000"),

majorityVoteCount: 3,

writeMajorityCount: 3,

votingMembersCount: 5,

writableVotingMembersCount: 4,

optimes: {

lastCommittedOpTime: { ts: Timestamp({ t:

1632436430, i: 1 }), t: Long("1") },

lastCommittedWallTime:

ISODate("2021-09-23T22:33:50.123Z"),

readConcernMajorityOpTime: { ts:

Timestamp({ t: 1632436430, i: 1 }), t: Long("1") },

appliedOpTime: { ts: Timestamp({ t:

1632436430, i: 1 }), t: Long("1") },

durableOpTime: { ts: Timestamp({ t:

1632436430, i: 1 }), t: Long("1") },

lastAppliedWallTime:

ISODate("2021-09-23T22:33:50.123Z"),

lastDurableWallTime:

ISODate("2021-09-23T22:33:50.123Z")

},

lastStableRecoveryTimestamp: Timestamp({ t:

1632436370, i: 1 }),

electionCandidateMetrics: {

lastElectionReason: 'electionTimeout',

lastElectionDate:

ISODate("2021-09-23T22:16:49.982Z"),

electionTerm: Long("1"),

lastCommittedOpTimeAtElection: { ts:

Timestamp({ t: 0, i: 0 }), t: Long("-1") },

lastSeenOpTimeAtElection: { ts: Timestamp({

t: 1632435398, i: 1 }), t: Long("-1") },

numVotesNeeded: 2,

priorityAtElection: 1,

electionTimeoutMillis:

Long("10000"),

numCatchUpOps: Long("0"),

newTermStartDate:

ISODate("2021-09-23T22:16:50.069Z"),

wMajorityWriteAvailabilityDate:

ISODate("2021-09-23T22:16:50.718Z")

},

members: [

{

_id: 0,

name: '127.0.0.1:27101',

health: 1,

state: 1,

stateStr: 'PRIMARY',

uptime: 3700,

optime: { ts: Timestamp({ t: 1632436430,

i: 1 }), t: Long("1") },

optimeDate:

ISODate("2021-09-23T22:33:50.000Z"),

syncSourceHost: '',

syncSourceId: -1,

infoMessage: '',

electionTime: Timestamp({ t: 1632435410,

i: 1 }),

electionDate:

ISODate("2021-09-23T22:16:50.000Z"),

configVersion: 3,

configTerm: 1,

self: true,

lastHeartbeatMessage: ''

},

{

_id: 1,

name: '127.0.0.1:27102',

health: 1,

state: 2,

stateStr: 'SECONDARY',

uptime: 1032,

optime: { ts: Timestamp({ t: 1632436430,

i: 1 }), t: Long("1") },

optimeDurable: { ts: Timestamp({ t:

1632436430, i: 1 }), t: Long("1") },

optimeDate:

ISODate("2021-09-23T22:33:50.000Z"),

optimeDurableDate:

ISODate("2021-09-23T22:33:50.000Z"),

lastHeartbeat: ISODate("2021-09-23T22:33:50.180Z"),

lastHeartbeatRecv:

ISODate("2021-09-23T22:33:50.174Z"),

pingMs: Long("0"),

lastHeartbeatMessage: '',

syncSourceHost: '127.0.0.1:27101',

syncSourceId: 0,

infoMessage: '',

configVersion: 3,

configTerm: 1

},

{

_id: 2,

name: '127.0.0.1:27100',

health: 1,

state: 7,

stateStr: 'ARBITER',

uptime: 1032,

lastHeartbeat:

ISODate("2021-09-23T22:33:50.180Z"),

lastHeartbeatRecv:

ISODate("2021-09-23T22:33:50.174Z"),

pingMs: Long("0"),

lastHeartbeatMessage: '',

syncSourceHost: '',

syncSourceId: -1,

infoMessage: '',

configVersion: 3,

configTerm: 1

},

{

_id: 3,

name: '127.0.0.1:27103',

health: 1,

state: 2,

stateStr: 'SECONDARY',

uptime: 173,

optime: { ts: Timestamp({ t: 1632436430,

i: 1 }), t: Long("1") },

optimeDurable: { ts: Timestamp({ t:

1632436430, i: 1 }), t: Long("1") },

optimeDate:

ISODate("2021-09-23T22:33:50.000Z"),

optimeDurableDate:

ISODate("2021-09-23T22:33:50.000Z"),

lastHeartbeat:

ISODate("2021-09-23T22:33:50.180Z"),

lastHeartbeatRecv:

ISODate("2021-09-23T22:33:51.172Z"),

pingMs: Long("0"),

lastHeartbeatMessage: '',

syncSourceHost: '127.0.0.1:27102',

syncSourceId: 1,

infoMessage: '',

configVersion: 3,

configTerm: 1

},

{

_id: 4,

name: '127.0.0.1:27104',

health: 1,

state: 2,

stateStr: 'SECONDARY',

uptime: 163,

optime: { ts: Timestamp({ t: 1632436430,

i: 1 }), t: Long("1") },

optimeDurable: { ts: Timestamp({ t:

1632436430, i: 1 }), t: Long("1") },

optimeDate:

ISODate("2021-09-23T22:33:50.000Z"),

optimeDurableDate:

ISODate("2021-09-23T22:33:50.000Z"),

lastHeartbeat:

ISODate("2021-09-23T22:33:50.180Z"),

lastHeartbeatRecv:

ISODate("2021-09-23T22:33:51.374Z"),

pingMs: Long("0"),

lastHeartbeatMessage: '',

syncSourceHost: '127.0.0.1:27103',

syncSourceId: 3,

infoMessage: '',

configVersion: 3,

configTerm: 1

}

],

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t: 1632436430, i:

1 }),

signature: {

hash:

Binary(Buffer.from("0000000000000000000000000000000000000000",

"hex"), 0),

keyId: Long("0")

}

},

operationTime: Timestamp({ t: 1632436430, i:

1 })

}

repl01

[direct: primary] admin>

We can also see which is the PRIMARY or

MASTER instance in our replication as follows:

>rs.isMaster()

2.7.

Testing the Replication Process

Now, we can test the process by adding a

document in the primary node. If replication is working properly, the document

will automatically be copied into the secondaries node.

We will add the below documents on

compta database:

{idclient:567, nomclient:"YAYA

Moussa", adresse:"Boulevard Amadou Ahidjo",

telephone:"67785-3390"},

{idclient:560, nomclient:"MBADI

Donatien", adresse:"Mbanga Japoma",

telephone:"67000-0000"},

{idclient:356, nomclient:"ONANA Brice

Roland",Ville:"Mbalmayo", telephone:"67785-3390"},

{idclient:580, nomclient:"SANTOUNGA

Yves-Cesaire", adresse:"Brescia Italie"},

{idclient:866,

nomclient:"YENE Yves-Evann", Pays:"Cameroun",

Contact:"MBADI OUM"}

First, connect to the primary node and

add a document using the insertMany command as follows:

repl01 [direct: primary] admin> use compta

switched to db compta

repl01 [direct: primary] compta>

db.clients.insertMany([{idclient:567, nomclient:"YAYA Moussa", adresse:"Boulevard

Amadou Ahidjo", telephone:"67785-3390"},

...

{idclient:560, nomclient:"MBADI Donatien",

adresse:"Mbanga Japoma", telephone:"67000-0000"},

... {idclient:356,

nomclient:"ONANA Brice Roland",Ville:"Mbalmayo",

telephone:"67785-3390"},

...

{idclient:580, nomclient:"SANTOUNGA Yves-Cesaire",

adresse:"Brescia Italie"},

...

{idclient:866, nomclient:"YENE Yves-Evann",

Pays:"Cameroun", Contact:"MBADI OUM"}

... ]);

{

acknowledged: true,

insertedIds: {

'0':

ObjectId("614d062cf545c64ecc90d9a0"),

'1':

ObjectId("614d062cf545c64ecc90d9a1"),

'2':

ObjectId("614d062cf545c64ecc90d9a2"),

'3':

ObjectId("614d062cf545c64ecc90d9a3"),

'4': ObjectId("614d062cf545c64ecc90d9a4")

}

}

repl01 [direct: primary] compta>

We can display documents as follow:

Now, we can switch to any secondary node

using the following command:

E.g: mongosh --port

27103

Once connected on a secondary node, we

try to display documents on clients collection:

We notice that, since we are connected

on a secondary node, we cannot display information because of error 13435.

We can allow a secondary node to accept

reads by running db.getMongo().setReadPref('secondary') in a mongo shell that is connected to

that secondary node. Allowing reads from a secondary is not recommended,

because you could be reading stale data if the node isn't yet synced with the

primary node.

2.8.

Removing node from replica set

MongoDB Replica Sets also allow users to

remove single or multiple instances they’ve added to the replica set using the

remove command. To remove a particular instance, we first need to shut it down.

Example: We need to remove instance

running on port 27104:

[root@linuxsvr01 ~]# mongo --port 27104

Current Mongosh Log ID: 614d0fd44520fc023111d647

Connecting to: mongodb://127.0.0.1:27104/?directConnection=true&serverSelectionTimeoutMS=2000

Using MongoDB: 5.0.2

Using Mongosh: 1.0.5

For mongosh info see: https://docs.mongodb.com/mongodb-shell/

------

The server generated these

startup warnings when booting:

2021-09-23T17:47:12.123-04:00:

Using the XFS filesystem is strongly recommended with the WiredTiger storage

engine. See http://dochub.mongodb.org/core/prodnotes-filesystem

2021-09-23T17:47:12.659-04:00:

Access control is not enabled for the database. Read and write access to data

and configuration is unrestricted

2021-09-23T17:47:12.659-04:00:

You are running this process as the root user, which is not recommended

2021-09-23T17:47:12.659-04:00:

/sys/kernel/mm/transparent_hugepage/enabled is 'always'. We suggest setting it

to 'never'

2021-09-23T17:47:12.659-04:00:

Soft rlimits for open file descriptors too low

------

Warning: Found ~/.mongorc.js, but not ~/.mongoshrc.js. ~/.mongorc.js

will not be loaded.

You may want to copy or rename

~/.mongorc.js to ~/.mongoshrc.js.

repl01 [direct: secondary] test>

repl01 [direct: secondary] test>

repl01:SECONDARY> use admin

switched to db admin

repl01:SECONDARY> db.shutdownServer()

MongoNetworkError: connection 3 to 127.0.0.1:27104

closedrepl01:SECONDARY>

Once we have shut down the server, we

need to connect with our primary server and use the remove command as follow:

repl01 [direct: primary]

admin> rs.remove('127.0.0.1:27104')

{

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t: 1632440665, i:

1 }),

signature: {

hash:

Binary(Buffer.from("0000000000000000000000000000000000000000",

"hex"), 0),

keyId: Long("0")

}

},

operationTime: Timestamp({ t: 1632440665, i:

1 })

}

repl01 [direct: primary]

admin>}

After remove the instance, we can run

the command rs.isMaster() to check the instance roles.

2.9.

Testing the failover

This exercise consists of simulate the loss

of PRIMARY instance and then see what happens concerning the remaining nodes.

Let’s first add the node that we removed

and then display the status of our replica set using rs.status():

As we can see, the PRIMARY instance is

running on port 27101.

So, we will shut down the primary

instance by killing the mongod process which is running instance 1.

The former primary instance is now down.

Let’s connect to any other node and check which one is the new primary.

We can see that the new PRIMARY instance

is running on port 27103.

Let’s restart the former primary

instance and see the new status.

The instance which running on 27101 is

now turned as SECONDARY instance.

2.10. Force a member to

be Primary by Setting its Priority High

You can force a replica

set member to become primary by giving it a higher members[n].priority value than any other member in the

set.

Optionally, you also can force a member

never to become primary by setting its members[n].priority value to 0, which means the member can never seek election as primary.

This procedure assumes the below

configuration (This is part of rs.status command output):

…

…

members:

[

{

_id: 0,

name:

'127.0.0.1:27101',

health: 1,

state: 2,

stateStr: 'SECONDARY',

…

…

},

{

_id: 1,

name: '127.0.0.1:27102',

health: 1,

state: 1,

stateStr: 'PRIMARY',

…

…

{

_id: 2,

name: '127.0.0.1:27100',

health: 1,

state: 7,

stateStr: 'ARBITER',

},

{

_id: 3,

name: '127.0.0.1:27103',

health: 1,

state: 2,

stateStr: 'SECONDARY',

},

{

_id: 4,

name: '127.0.0.1:27104',

health: 1,

state: 2,

stateStr: 'SECONDARY',

}

]

…

…

The procedure assumes that

127.0.0.1/27102 is the actual primary node. We need to make 127.0.0.1/101 to be

the primary node.

We have four members and one arbiter. In

a mongosh session that is connected to the

primary 127.0.0.1/27102, we use the following sequence of operations to make 127.0.0.1/27101

the primary:

cfg =

rs.conf()

cfg.members[0].priority = 1

cfg.members[1].priority

= 0.5

cfg.members[2].priority

= 0 --Arbiter

cfg.members[3].priority

= 0.5

cfg.members[4].priority

= 0.5

rs.reconfig(cfg)

By running rs.status command we have the below:

…

…

members: [

{

_id: 0,

name: '127.0.0.1:27101',

health: 1,

state: 1,

stateStr: 'PRIMARY',

…

…

},

{

_id: 1,

name: '127.0.0.1:27102',

health: 1,

state: 2,

stateStr: 'SECONDARY',

…

…

},

{

_id: 2,

name: '127.0.0.1:27100',

health: 1,

state: 7,

stateStr: 'ARBITER',

…

…

},

{

_id: 3,

name: '127.0.0.1:27103',

health: 1,

state: 2,

stateStr: 'SECONDARY',

…

…

},

{

_id: 4,

name: '127.0.0.1:27104',

health: 1,

state: 2,

stateStr: 'SECONDARY',

…

…

}

],

ok: 1,

…

…

repl01

[direct: secondary] test> cfg=rs.conf()

{

_id: 'repl01',

version: 7,

term: 6,

members: [

{

_id: 0,

host: '127.0.0.1:27101',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 1,

tags: {},

slaveDelay: Long("0"),

votes: 1

},

{

_id: 1,

host: '127.0.0.1:27102',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 0.5,

tags: {},

slaveDelay: Long("0"),

votes: 1

},

{

_id: 2,

host: '127.0.0.1:27100',

arbiterOnly: true,

buildIndexes: true,

hidden: false,

priority: 0,

tags: {},

slaveDelay: Long("0"),

votes: 1

},

{

_id: 3,

host: '127.0.0.1:27103',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 0.5,

tags: {},

slaveDelay: Long("0"),

votes: 1

},

{

_id: 4,

host: '127.0.0.1:27104',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 0.5,

tags: {},

slaveDelay: Long("0"),

votes: 1

}

],

protocolVersion: Long("1"),

writeConcernMajorityJournalDefault: true,

settings: {

chainingAllowed: true,

heartbeatIntervalMillis: 2000,

heartbeatTimeoutSecs: 10,

electionTimeoutMillis: 10000,

catchUpTimeoutMillis: -1,

catchUpTakeoverDelayMillis: 30000,

getLastErrorModes: {},

getLastErrorDefaults: { w: 1, wtimeout: 0

},

replicaSetId:

ObjectId("614cfcc67db73190be7daa50")

}

}

repl01

[direct: secondary] test>

3. Configuring

MongoDB Sharding

3.1.

Why sharding

In replication,

- all writes go to master node

- Latency sensitive queries still go to

master

- Single replica set has limitation of 12

nodes

- Memory can't be large enough when active

dataset is big

- Local disk is not big enough

- Vertical scaling is too expensive

3.2.

Architecture

On this tutorial, we consider the former

replica set with:

- One master instance

- Three secondaries’ instances

- An arbiter

Now, we will create:

- One replicat set with three config

instances

- One mongos instance

- An additional replicat set with one

master and two secondary nodes.

3.3.

Creation of a replicat set with config

servers

To achieve that, we will follow steps as

below:

- Create directories for config server

replica set

mkdir -p /opt/data/replcfg/ instcfg01

mkdir -p /opt/data/replcfg/ instcfg02

mkdir -p /opt/data/replcfg/ instcfg03

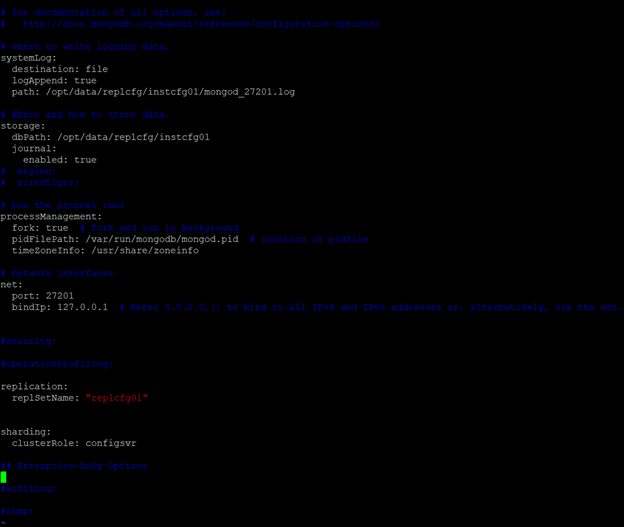

- Creation of config server instances

The configuration file for the config

server is as follow:

We start the config instances using

mongod:

- Initiation of config server replication

[root@linuxsvr01

replcfg]# mongosh --port 27201

Current

Mongosh Log ID: 6153e9ac7b64256f746b9c2e

Connecting

to:

mongodb://127.0.0.1:27201/?directConnection=true&serverSelectionTimeoutMS=2000

Using

MongoDB: 5.0.2

Using

Mongosh: 1.0.5

For

mongosh info see: https://docs.mongodb.com/mongodb-shell/

------

The server generated these startup warnings

when booting:

2021-09-29T00:00:13.882-04:00: Using the XFS

filesystem is strongly recommended with the WiredTiger storage engine. See

http://dochub.mongodb.org/core/prodnotes-filesystem

2021-09-29T00:00:14.846-04:00: Access

control is not enabled for the database. Read and write access to data and

configuration is unrestricted

2021-09-29T00:00:14.846-04:00: You are

running this process as the root user, which is not recommended

2021-09-29T00:00:14.846-04:00:

/sys/kernel/mm/transparent_hugepage/enabled is 'always'. We suggest setting it

to 'never'

2021-09-29T00:00:14.846-04:00: Soft rlimits

for open file descriptors too low

------

Warning:

Found ~/.mongorc.js, but not ~/.mongoshrc.js. ~/.mongorc.js will not be loaded.

You may want to copy or rename ~/.mongorc.js

to ~/.mongoshrc.js.

replcfg01

[direct: primary] test>

3.4.

Creation of mongos instance

Mongos instances is also called router

instances. They are basically mongo instances, interface with client

applications and direct operations to the appropriate shard. The query router

processes and targets the operations to shards and then returns results to the

clients. A sharded cluster can contain more than one query router to divide the

client request load. A client sends requests to one query router. Generally, a

sharded cluster have many query routers.

Now, we start the mongos instance using

the config servers created above.

mongos --port 27300 --fork --logpath

/opt/data/mongos/mongos_27300.log

--configdb

replcfg01/127.0.0.1:27201,127.0.0.1:27202,127.0.0.1:27203

Now, we an connect on our mongos server

using mongosh shell command.

3.5.

Adding Shards to Mongos

Shards are used to store data. They

provide high availability and data consistency. In production environment, each

shard is a separate replica set as follow:

To add shards in mongos, we use sh.addShard() method using the admin database.

[direct: mongos]

test> use admin

switched to db admin

[direct: mongos]

admin>

sh.addShard( "repl01/127.0.0.1:27101,127.0.0.1:27102,127.0.0.1:27103,127.0.0.1:27104,127.0.0.1:27100");

To see the status of the sharding status, we run the command sh.status() under the mongos prompt:

[direct: mongos]

admin> sh.status()

shardingVersion

{

_id: 1,

minCompatibleVersion: 5,

currentVersion: 6,

clusterId:

ObjectId("6153e945fbd96b9e59d869dd")

}

---

shards

[

{

_id: 'repl01',

host:

'repl01/127.0.0.1:27101,127.0.0.1:27102,127.0.0.1:27103,127.0.0.1:27104',

state: 1,

topologyTime: Timestamp({ t: 1632895183, i:

1 })

}

]

---

active mongoses

[ { '5.0.2': 1 } ]

---

autosplit

{ 'Currently enabled':

'yes' }

---

balancer

{

'Currently running': 'no',

'Currently enabled': 'yes',

'Failed balancer rounds in last 5 attempts':

0,

'Migration Results for the last 24 hours':

'No recent migrations'

}

---

databases

[

{

database: {

_id: 'compta',

primary: 'repl01',

partitioned: false,

version: {

uuid:

UUID("9bc29788-fc55-4b6f-8a10-1794a90febd1"),

timestamp: Timestamp({ t: 1632895183,

i: 2 }),

lastMod: 1

}

},

collections: {}

},

{

database: { _id: 'config', primary:

'config', partitioned: true },

collections: {

'config.system.sessions': {

shardKey: { _id: 1 },

unique: false,

balancing: true,

chunkMetadata: [ { shard: 'repl01',

nChunks: 1024 } ],

chunks: [

'too many chunks to print, use

verbose if you want to force print'

],

tags: []

}

}

}

]

[direct: mongos]

admin>

3.6.

Adding additional replica set nodes to sharding

Let’s add another 3 nodes replica set repl02 on our sharding cluster.

[root@linuxsvr01 ~]# mongod --replSet

repl02 --journal --port 27401 --shardsvr --dbpath /opt/data/repl02/inst01

--logpath /opt/data/repl02/inst01/mongod_27401.log –fork

about to fork child

process, waiting until server is ready for connections.

forked process: 57918

child process started

successfully, parent exiting

[root@linuxsvr01 ~]#

[root@linuxsvr01 ~]# mongod --replSet

repl02 --journal --port 27402 --shardsvr --dbpath /opt/data/repl02/inst02

--logpath /opt/data/repl02/inst01/mongod_27402.log –fork

about to fork child

process, waiting until server is ready for connections.

forked process: 57984

child process started

successfully, parent exiting

[root@linuxsvr01 ~]#

[root@linuxsvr01 ~]# mongod --replSet

repl02 --journal --port 27403 --shardsvr --dbpath /opt/data/repl02/inst03 --logpath

/opt/data/repl02/inst01/mongod_27403.log –fork

about to fork child

process, waiting until server is ready for connections.

forked process: 58045

child process started

successfully, parent exiting

[root@linuxsvr01 ~]#

[root@linuxsvr01 ~]# mongosh --port 27401

Current Mongosh Log ID: 61541eeee1fbd30b59fba380

Connecting to:

mongodb://127.0.0.1:27401/?directConnection=true&serverSelectionTimeoutMS=2000

Using MongoDB:

5.0.2

Using Mongosh:

1.0.5

For mongosh info see:

https://docs.mongodb.com/mongodb-shell/

------

The server

generated these startup warnings when booting:

2021-09-29T03:55:13.573-04:00: Using the XFS filesystem is strongly

recommended with the WiredTiger storage engine. See http://dochub.mongodb.org/core/prodnotes-filesystem

2021-09-29T03:55:14.537-04:00: Access control is not enabled for the

database. Read and write access to data and configuration is unrestricted

2021-09-29T03:55:14.537-04:00: You are running this process as the root

user, which is not recommended

2021-09-29T03:55:14.537-04:00: This server is bound to localhost. Remote

systems will be unable to connect to this server. Start the server with

--bind_ip <address> to specify which IP addresses it should serve

responses from, or with --bind_ip_all to bind to all interfaces. If this

behavior is desired, start the server with --bind_ip 127.0.0.1 to disable this

warning

2021-09-29T03:55:14.537-04:00:

/sys/kernel/mm/transparent_hugepage/enabled is 'always'. We suggest setting it

to 'never'

2021-09-29T03:55:14.537-04:00: Soft rlimits for open file descriptors

too low

------

Warning: Found ~/.mongorc.js, but not ~/.mongoshrc.js.

~/.mongorc.js will not be loaded.

You may want

to copy or rename ~/.mongorc.js to ~/.mongoshrc.js.

test>

test> rs.initiate( {

... _id :

"repl02",

... members:

[ {_id : 0, host : "127.0.0.1:27401"},

...

{_id : 1, host : "127.0.0.1:27402"},

...

{_id : 2, host : "127.0.0.1:27403"}

... ]

... })

{ ok: 1 }

repl02 [direct: other] test>

repl02 [direct: primary] test>

[root@linuxsvr01 ~]# mongosh

--port 27300

Current Mongosh Log

ID: 615420cf8b53c829ff63f6d5

Connecting to: mongodb://127.0.0.1:27300/?directConnection=true&serverSelectionTimeoutMS=2000

Using MongoDB: 5.0.2

Using Mongosh: 1.0.5

For mongosh info see:

https://docs.mongodb.com/mongodb-shell/

------

The server generated these startup warnings

when booting:

2021-09-29T00:30:11.523-04:00: Access

control is not enabled for the database. Read and write access to data and

configuration is unrestricted

2021-09-29T00:30:11.523-04:00: You are

running this process as the root user, which is not recommended

2021-09-29T00:30:11.523-04:00: This server

is bound to localhost. Remote systems will be unable to connect to this server.

Start the server with --bind_ip <address> to specify which IP addresses

it should serve responses from, or with --bind_ip_all to bind to all

interfaces. If this behavior is desired, start the server with --bind_ip

127.0.0.1 to disable this warning

------

Warning: Found

~/.mongorc.js, but not ~/.mongoshrc.js. ~/.mongorc.js will not be loaded.

You may want to copy or rename ~/.mongorc.js

to ~/.mongoshrc.js.

[direct: mongos]

test>

[direct: mongos] test> use admin

switched to db admin

[direct: mongos] admin>

[direct: mongos] admin> sh.addShard( "repl02/127.0.0.1:27401,127.0.0.1:27402,127.0.0.1:27403"

)

{

shardAdded: 'repl02',

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t:

1632903493, i: 1 }),

signature: {

hash: Binary(Buffer.from("0000000000000000000000000000000000000000",

"hex"), 0),

keyId: Long("0")

}

},

operationTime: Timestamp({ t:

1632903492, i: 4 })

}

[direct: mongos] admin>

3.7. Shard a collection

Now we are going for testing our

sharding configuration. We are using test database as follow:

[direct: mongos] test>

[direct: mongos] test> var bulk =

db.big_collection.initializeUnorderedBulkOp();

[direct:

mongos] test> people = ["Mbadi", "Tonye",

"Moussa", "Onana", "Engolo", "Bello",

"Evembe", "Diabongue", "Essomba",

"Biya", "Mengolo", "Um Nyobe"];

[

'Mbadi',

'Tonye',

'Moussa',

'Onana',

'Engolo',

'Bello',

'Evembe',

'Diabongue',

'Essomba', 'Biya',

'Mengolo', 'Um Nyobe'

]

[direct: mongos] test> for(var i=0; i<2000000; i++){user_id =

i;name = people[Math.floor(Math.random()*people.length)];number =

Math.floor(Math.random()*10001);bulk.insert( { "user_id":user_id,

"name":name, "number":number });}

{ nInsertOps: 2000000, nUpdateOps: 0, nRemoveOps: 0, nBatches: 2003 }

[direct: mongos] test>

[direct:

mongos] test> bulk.execute();

{

acknowledged: true,

insertedCount: 2000000,

insertedIds: [

{ index: 0, _id:

ObjectId("615d7e62e3abab4e4107b2ea") },

{ index: 1, _id:

ObjectId("615d7e62e3abab4e4107b2eb") },

{ index: 2, _id: ObjectId("615d7e62e3abab4e4107b2ec")

},

{ index: 3, _id:

ObjectId("615d7e62e3abab4e4107b2ed") },

{ index: 4, _id:

ObjectId("615d7e62e3abab4e4107b2ee") },

{ index: 5, _id:

ObjectId("615d7e62e3abab4e4107b2ef") },

{ index: 6, _id:

ObjectId("615d7e62e3abab4e4107b2f0") },

{ index: 7, _id:

ObjectId("615d7e62e3abab4e4107b2f1") },

{ index: 8, _id:

ObjectId("615d7e62e3abab4e4107b2f2") },

{ index: 9, _id:

ObjectId("615d7e62e3abab4e4107b2f3") },

{ index: 10, _id:

ObjectId("615d7e62e3abab4e4107b2f4") },

{ index: 11, _id:

ObjectId("615d7e62e3abab4e4107b2f5") },

{ index: 12, _id:

ObjectId("615d7e62e3abab4e4107b2f6") },

{ index: 13, _id:

ObjectId("615d7e62e3abab4e4107b2f7") },

{ index: 14, _id:

ObjectId("615d7e62e3abab4e4107b2f8") },

{ index: 15, _id:

ObjectId("615d7e62e3abab4e4107b2f9") },

{ index: 16, _id:

ObjectId("615d7e62e3abab4e4107b2fa") },

{ index: 17, _id: ObjectId("615d7e62e3abab4e4107b2fb")

},

{ index: 18, _id:

ObjectId("615d7e62e3abab4e4107b2fc") },

{ index: 19, _id:

ObjectId("615d7e62e3abab4e4107b2fd") },

{ index: 20, _id:

ObjectId("615d7e62e3abab4e4107b2fe") },

{ index: 21, _id: ObjectId("615d7e62e3abab4e4107b2ff")

},

{ index: 22, _id:

ObjectId("615d7e62e3abab4e4107b300") },

{ index: 23, _id:

ObjectId("615d7e62e3abab4e4107b301") },

{ index: 24, _id:

ObjectId("615d7e62e3abab4e4107b302") },

{ index: 25, _id:

ObjectId("615d7e62e3abab4e4107b303") },

{ index: 26, _id:

ObjectId("615d7e62e3abab4e4107b304") },

{ index: 27, _id:

ObjectId("615d7e62e3abab4e4107b305") },

{ index: 28, _id:

ObjectId("615d7e62e3abab4e4107b306") },

{ index: 29, _id:

ObjectId("615d7e62e3abab4e4107b307") },

{ index: 30, _id:

ObjectId("615d7e62e3abab4e4107b308") },

{ index: 31, _id:

ObjectId("615d7e62e3abab4e4107b309") },

{ index: 32, _id:

ObjectId("615d7e62e3abab4e4107b30a") },

{ index: 33, _id:

ObjectId("615d7e62e3abab4e4107b30b") },

{ index: 34, _id:

ObjectId("615d7e62e3abab4e4107b30c") },

{ index: 35, _id:

ObjectId("615d7e62e3abab4e4107b30d") },

{ index: 36, _id:

ObjectId("615d7e62e3abab4e4107b30e") },

{ index: 37, _id:

ObjectId("615d7e62e3abab4e4107b30f") },

{ index: 38, _id:

ObjectId("615d7e62e3abab4e4107b310") },

{ index: 39, _id:

ObjectId("615d7e62e3abab4e4107b311") },

{ index: 40, _id:

ObjectId("615d7e62e3abab4e4107b312") },

{ index: 41, _id:

ObjectId("615d7e62e3abab4e4107b313") },

{ index: 42, _id:

ObjectId("615d7e62e3abab4e4107b314") },

{ index: 43, _id:

ObjectId("615d7e62e3abab4e4107b315") },

{ index: 44, _id: ObjectId("615d7e62e3abab4e4107b316")

},

{ index: 45, _id:

ObjectId("615d7e62e3abab4e4107b317") },

{ index: 46, _id:

ObjectId("615d7e62e3abab4e4107b318") },

{ index: 47, _id:

ObjectId("615d7e62e3abab4e4107b319") },

{ index: 48, _id: ObjectId("615d7e62e3abab4e4107b31a")

},

{ index: 49, _id:

ObjectId("615d7e62e3abab4e4107b31b") },

{ index: 50, _id:

ObjectId("615d7e62e3abab4e4107b31c") },

{ index: 51, _id:

ObjectId("615d7e62e3abab4e4107b31d") },

{ index: 52, _id: ObjectId("615d7e62e3abab4e4107b31e")

},

{ index: 53, _id:

ObjectId("615d7e62e3abab4e4107b31f") },

{ index: 54, _id:

ObjectId("615d7e62e3abab4e4107b320") },

{ index: 55, _id:

ObjectId("615d7e62e3abab4e4107b321") },

{ index: 56, _id:

ObjectId("615d7e62e3abab4e4107b322") },

{ index: 57, _id:

ObjectId("615d7e62e3abab4e4107b323") },

{ index: 58, _id:

ObjectId("615d7e62e3abab4e4107b324") },

{ index: 59, _id:

ObjectId("615d7e62e3abab4e4107b325") },

{ index: 60, _id:

ObjectId("615d7e62e3abab4e4107b326") },

{ index: 61, _id:

ObjectId("615d7e62e3abab4e4107b327") },

{ index: 62, _id:

ObjectId("615d7e62e3abab4e4107b328") },

{ index: 63, _id:

ObjectId("615d7e62e3abab4e4107b329") },

{ index: 64, _id:

ObjectId("615d7e62e3abab4e4107b32a") },

{ index: 65, _id:

ObjectId("615d7e62e3abab4e4107b32b") },

{ index: 66, _id:

ObjectId("615d7e62e3abab4e4107b32c") },

{ index: 67, _id:

ObjectId("615d7e62e3abab4e4107b32d") },

{ index: 68, _id:

ObjectId("615d7e62e3abab4e4107b32e") },

{ index: 69, _id:

ObjectId("615d7e62e3abab4e4107b32f") },

{ index: 70, _id:

ObjectId("615d7e62e3abab4e4107b330") },

{ index: 71, _id:

ObjectId("615d7e62e3abab4e4107b331") },

{ index: 72, _id:

ObjectId("615d7e62e3abab4e4107b332") },

{ index: 73, _id:

ObjectId("615d7e62e3abab4e4107b333") },

{ index: 74, _id:

ObjectId("615d7e62e3abab4e4107b334") },

{ index: 75, _id:

ObjectId("615d7e62e3abab4e4107b335") },

{ index: 76, _id: ObjectId("615d7e62e3abab4e4107b336")

},

{ index: 77, _id:

ObjectId("615d7e62e3abab4e4107b337") },

{ index: 78, _id:

ObjectId("615d7e62e3abab4e4107b338") },

{ index: 79, _id:

ObjectId("615d7e62e3abab4e4107b339") },

{ index: 80, _id: ObjectId("615d7e62e3abab4e4107b33a")

},

{ index: 81, _id:

ObjectId("615d7e62e3abab4e4107b33b") },

{ index: 82, _id:

ObjectId("615d7e62e3abab4e4107b33c") },

{ index: 83, _id:

ObjectId("615d7e62e3abab4e4107b33d") },

{ index: 84, _id: ObjectId("615d7e62e3abab4e4107b33e")

},

{ index: 85, _id:

ObjectId("615d7e62e3abab4e4107b33f") },

{ index: 86, _id:

ObjectId("615d7e62e3abab4e4107b340") },

{ index: 87, _id:

ObjectId("615d7e62e3abab4e4107b341") },

{ index: 88, _id:

ObjectId("615d7e62e3abab4e4107b342") },

{ index: 89, _id:

ObjectId("615d7e62e3abab4e4107b343") },

{ index: 90, _id:

ObjectId("615d7e62e3abab4e4107b344") },

{ index: 91, _id:

ObjectId("615d7e62e3abab4e4107b345") },

{ index: 92, _id:

ObjectId("615d7e62e3abab4e4107b346") },

{ index: 93, _id:

ObjectId("615d7e62e3abab4e4107b347") },

{ index: 94, _id:

ObjectId("615d7e62e3abab4e4107b348") },

{ index: 95, _id:

ObjectId("615d7e62e3abab4e4107b349") },

{ index: 96, _id:

ObjectId("615d7e62e3abab4e4107b34a") },

{ index: 97, _id:

ObjectId("615d7e62e3abab4e4107b34b") },

{ index: 98, _id:

ObjectId("615d7e62e3abab4e4107b34c") },

{ index: 99, _id:

ObjectId("615d7e62e3abab4e4107b34d") },

... 1999900 more items

],

matchedCount: 0,

modifiedCount: 0,

deletedCount: 0,

upsertedCount: 0,

upsertedIds: []

}

[direct:

mongos] test>

[direct: mongos] test> sh.enableSharding( "test" )

{

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t:

1633517943, i: 2 }),

signature: {

hash:

Binary(Buffer.from("0000000000000000000000000000000000000000",

"hex"), 0),

keyId: Long("0")

}

},

operationTime: Timestamp({ t:

1633517943, i: 2 })

}

[direct: mongos] test> db.big_collection.createIndex( { number : 1

} )

number_1

[direct: mongos] test>

[direct: mongos]

test>

sh.shardCollection("test.big_collection",{"number":1});

{

collectionsharded: 'test.big_collection',

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t: 1633518159, i:

4 }),

signature: {

hash: Binary(Buffer.from("0000000000000000000000000000000000000000",

"hex"), 0),

keyId: Long("0")

}

},

operationTime: Timestamp({ t: 1633518158, i:

6 })

}

[direct:

mongos] test>

[direct:

mongos] test> db.printShardingStatus()

shardingVersion

{

_id: 1,

minCompatibleVersion: 5,

currentVersion: 6,

clusterId:

ObjectId("6153e945fbd96b9e59d869dd")

}

---

shards

[

{

_id: 'repl01',

host:

'repl01/127.0.0.1:27101,127.0.0.1:27102,127.0.0.1:27103,127.0.0.1:27104',

state: 1,

topologyTime: Timestamp({ t: 1632895183, i:

1 })

},

{

_id: 'repl02',

host:

'repl02/127.0.0.1:27401,127.0.0.1:27402,127.0.0.1:27403',

state: 1,

topologyTime: Timestamp({ t: 1632903492, i:

2 })

}

]

---

active

mongoses

[ {

'5.0.2': 1 } ]

---

autosplit

{

'Currently enabled': 'yes' }

---

balancer

{

'Currently running': 'no',

'Failed balancer rounds in last 5 attempts':

4,

'Last reported error': 'Could not find host

matching read preference { mode: "primary" } for set repl02',

'Time of Reported error':

ISODate("2021-10-06T09:20:01.132Z"),

'Currently enabled': 'yes',

'Migration Results for the last 24 hours':

'No recent migrations'

}

---

databases

[

{

database: {

_id: 'compta',

primary: 'repl01',

partitioned: false,

version: {

uuid:

UUID("9bc29788-fc55-4b6f-8a10-1794a90febd1"),

timestamp: Timestamp({ t: 1632895183,

i: 2 }),

lastMod: 1

}

},

collections: {}

},

{

database: { _id: 'config', primary:

'config', partitioned: true },

collections: {

'config.system.sessions': {

shardKey: { _id: 1 },

unique: false,

balancing: true,

chunkMetadata: [

{ shard: 'repl01', nChunks: 512 },

{ shard: 'repl02', nChunks: 512 }

],

chunks: [

'too many chunks to print, use

verbose if you want to force print'

],

tags: []

}

}

},

{

database: {

_id: 'people',

primary: 'repl02',

partitioned: true,

version: {

uuid:

UUID("0fb50b4f-1556-4b7f-8d22-db4f90748f4a"),

timestamp: Timestamp({ t: 1632908273,

i: 2 }),

lastMod: 1

}

},

collections: {}

},

{

database: {

_id: 'test',

primary: 'repl01',

partitioned: true,

version: {

uuid:

UUID("720d5514-53f3-473c-a807-0326bc2335c5"),

timestamp: Timestamp({ t: 1633438992,

i: 1 }),

lastMod: 1

}

},

collections: {

'test.big_collection': {

shardKey: { number: 1 },

unique: false,

balancing: true,

chunkMetadata: [ { shard: 'repl01',

nChunks: 4 } ],

chunks: [

{ min: { number: MinKey() }, max: {

number: 2622 }, 'on shard': 'repl01', 'last modified': Timestamp({ t: 1, i: 0

}) },

{ min: { number: 2622 }, max: {

number: 5246 }, 'on shard': 'repl01', 'last modified': Timestamp({ t: 1, i: 1

}) },

{ min: { number: 5246 }, max: {

number: 7869 }, 'on shard': 'repl01', 'last modified': Timestamp({ t: 1, i: 2

}) },

{ min: { number: 7869 }, max: {

number: MaxKey() }, 'on shard': 'repl01', 'last modified': Timestamp({ t: 1, i:

3 }) }

],

tags: []

}

}

}

]

[direct:

mongos] test>

[direct:

mongos] test> db.big_collection.getShardDistribution();

Shard

repl01 at

repl01/127.0.0.1:27101,127.0.0.1:27102,127.0.0.1:27103,127.0.0.1:27104

{

data: '122.22MiB',

docs: 2000000,

chunks: 3,

'estimated data per chunk': '40.74MiB',

'estimated docs per chunk': 666666

}

---

Shard

repl02 at repl02/127.0.0.1:27401,127.0.0.1:27402,127.0.0.1:27403

{

data: '32.04MiB',

docs: 524268,

chunks: 1,

'estimated data per chunk': '32.04MiB',

'estimated docs per chunk': 524268

}

---

Totals

{

data: '154.26MiB',

docs: 2524268,

chunks: 4,

'Shard repl01': [

'79.23 % data',

'79.23 % docs in cluster',

'64B avg obj size on shard'

],

'Shard repl02': [

'20.76 % data',

'20.76 % docs in cluster',

'64B avg obj size on shard'

]

}

[direct:

mongos] test>

Thank you for providing this blog really appreciate the efforts you have taken into curating this article if you want you can check out data science course in bangalore they have a lot to offer with regards to data science in terms of training and live projects.

RépondreSupprimer